How often do you use AI? I tracked my Sunday workday to find out. Between 4:30 & 9:00 PM (with a dinner break), I logged every AI interaction while handling emails, analyzing data, & writing.

If the average American picks up their mobile phone 144 times per day & we call that addiction, I am using AI about a hundred times per hour. Is AI ten times more valuable than a phone?

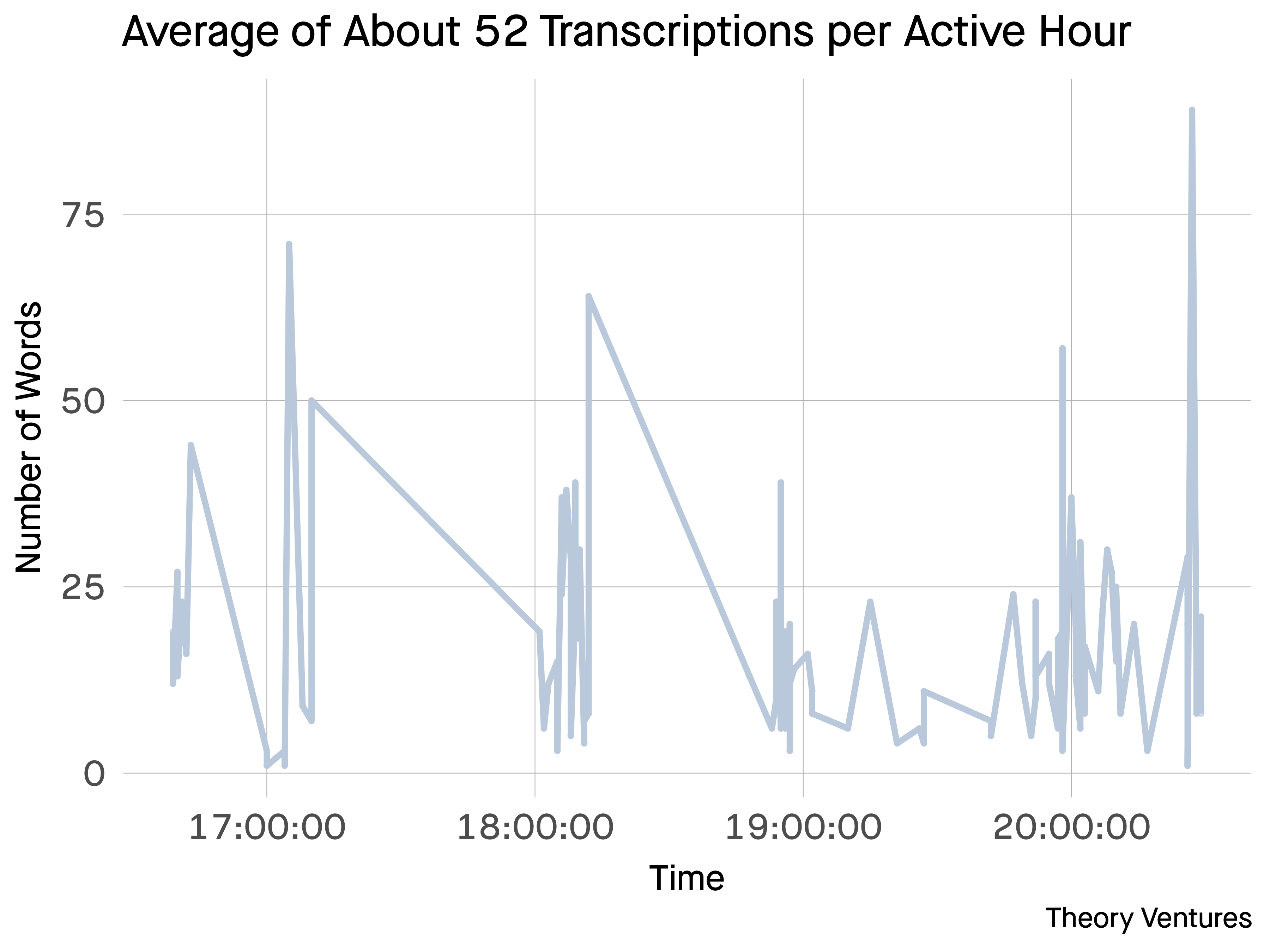

Speech

Dictation activity is bursty, with a break for dinner. From 7 PM onward, I’m hitting the speech API at least once every 2 minutes, & closer to once every 90 seconds on average. Around 8:00 PM I started on email, which shows up as a massive activity spike.

| Field | Value |
|---|---|
| Timestamps | 110 |
| Total Word Count | 1998 |
| Average Words per Call | 18 |
| Calls per Hour | 51 |

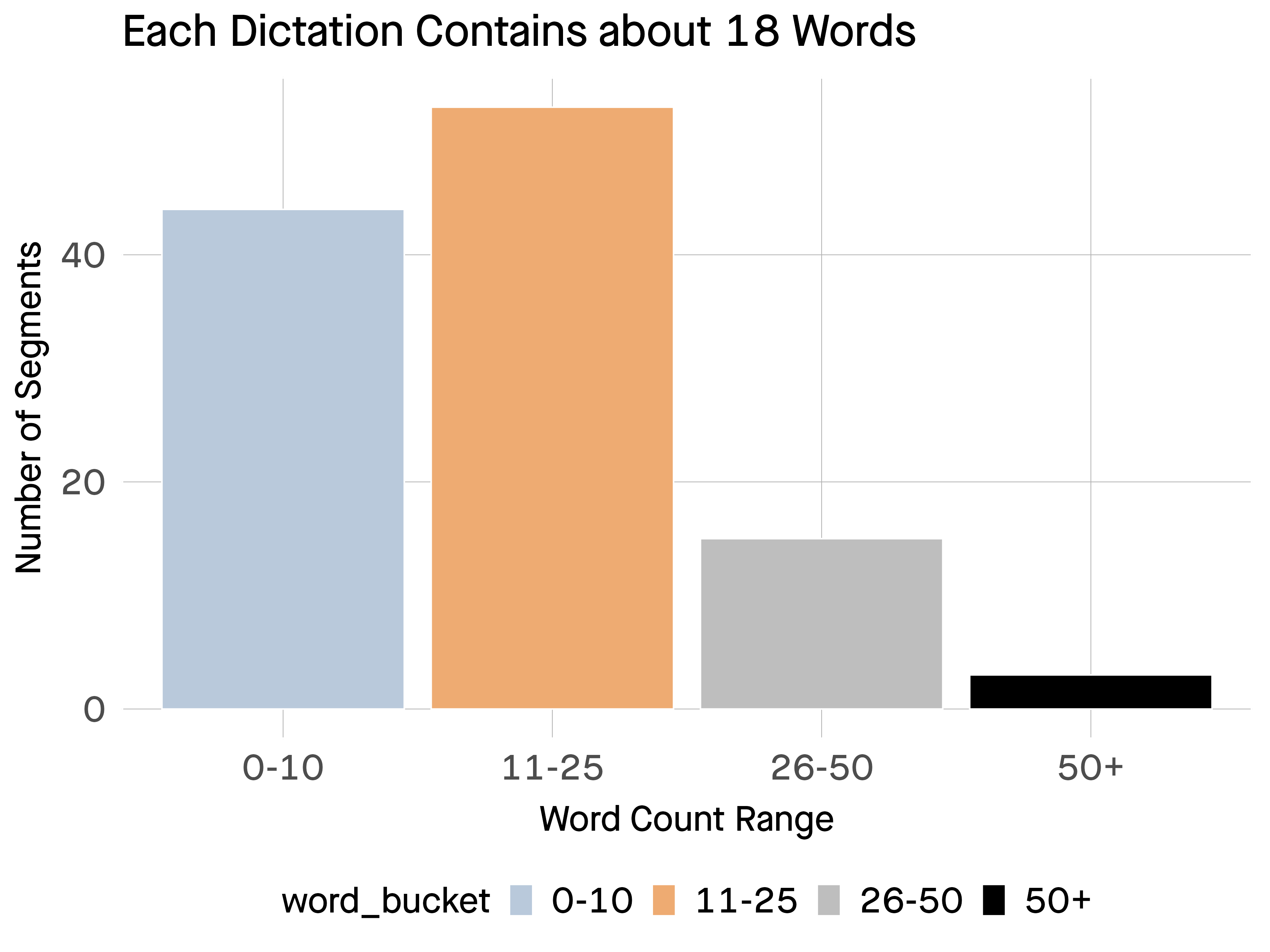

The average transcription contains about 18 words, but more than a quarter of them run longer than 50 words. Those longer transcriptions are typically entire email responses dictated in one shot.
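For anyone who wants to reproduce this kind of summary, here is a minimal sketch. It assumes a log with one (timestamp, word count) pair per dictation call; the `calls` sample and the `summarize` helper are made up for illustration and are not my actual logging setup.

```python
from datetime import datetime

# Hypothetical log: one (timestamp, word_count) pair per dictation call.
calls = [
    (datetime(2025, 1, 5, 19, 0, 0), 12),
    (datetime(2025, 1, 5, 19, 1, 30), 64),
    (datetime(2025, 1, 5, 19, 3, 0), 9),
    (datetime(2025, 1, 5, 19, 4, 30), 15),
]

def summarize(calls, long_threshold=50):
    """Return call count, average words, calls per hour, and share of long calls."""
    counts = [words for _, words in calls]
    times = sorted(ts for ts, _ in calls)
    # Hours between the first and last call in the log.
    span_hours = (times[-1] - times[0]).total_seconds() / 3600
    return {
        "calls": len(calls),
        "avg_words": sum(counts) / len(counts),
        "calls_per_hour": len(calls) / span_hours if span_hours > 0 else float("nan"),
        "share_long": sum(c > long_threshold for c in counts) / len(counts),
    }

stats = summarize(calls)
print(stats)

# Sanity check against the table: 1998 words over 110 calls.
print(round(1998 / 110))  # 18
```

Note that calls per hour here is measured over the span between the first & last call, so a long break such as dinner would drag the rate down unless it is excluded first.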

Dictation is 3 times faster than typing, so it saves me an enormous amount of time.

Two AIs process my voice : first a dictation AI, then a language AI that edits for brevity & clarity (both of these run on my laptop).

Coding

| Field | Value |
|---|---|
| Venture Industry Analysis, lines of code | 267 |
| Speech analysis, lines of code | 121 |
| AI Chats | 12 |
| Estimated AI Calls per Hour | 32 |

Data analysis is a key part of publishing data-driven blog posts : formatting the data, analyzing it in R, & then publishing charts. All of this is now predominantly handled with prompts to an AI.

I can generate several hundred lines of code in 5-10 minutes. With the newer models, I expect this to collapse to 1-2 minutes.

In fact, I find myself growing reliant on the AI to the extent that I no longer remember some of the R syntax. That is a sign of working at a higher level of abstraction : one of the promises of AI.

Fully focused on work, I’m employing AI roughly 50-100 times per hour.

Within the last 24 months, AI has clearly become an essential coworker : at least as important as a mobile phone, & very likely more critical.

Choosing the Right AI Model : Cost vs Performance

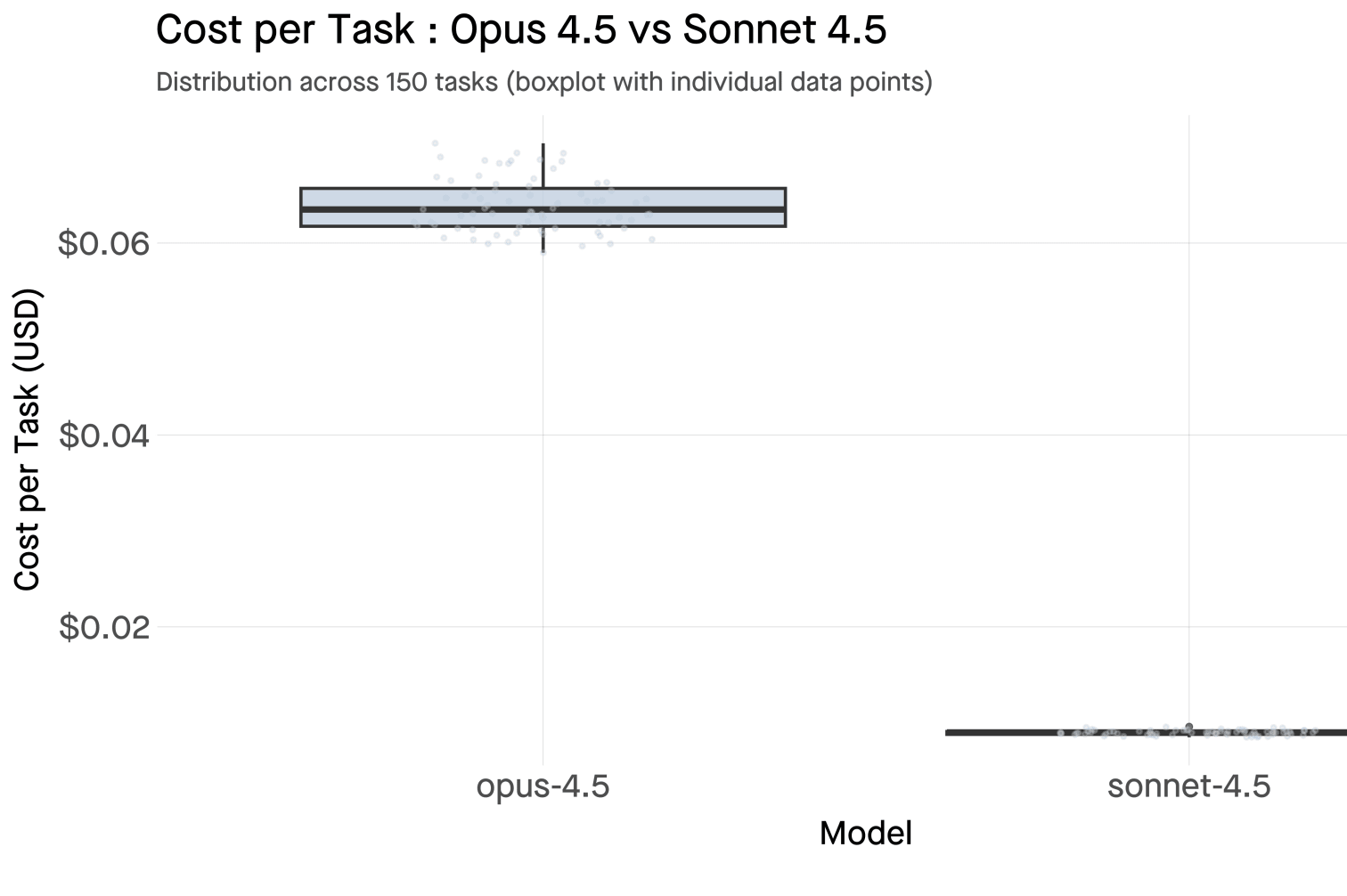

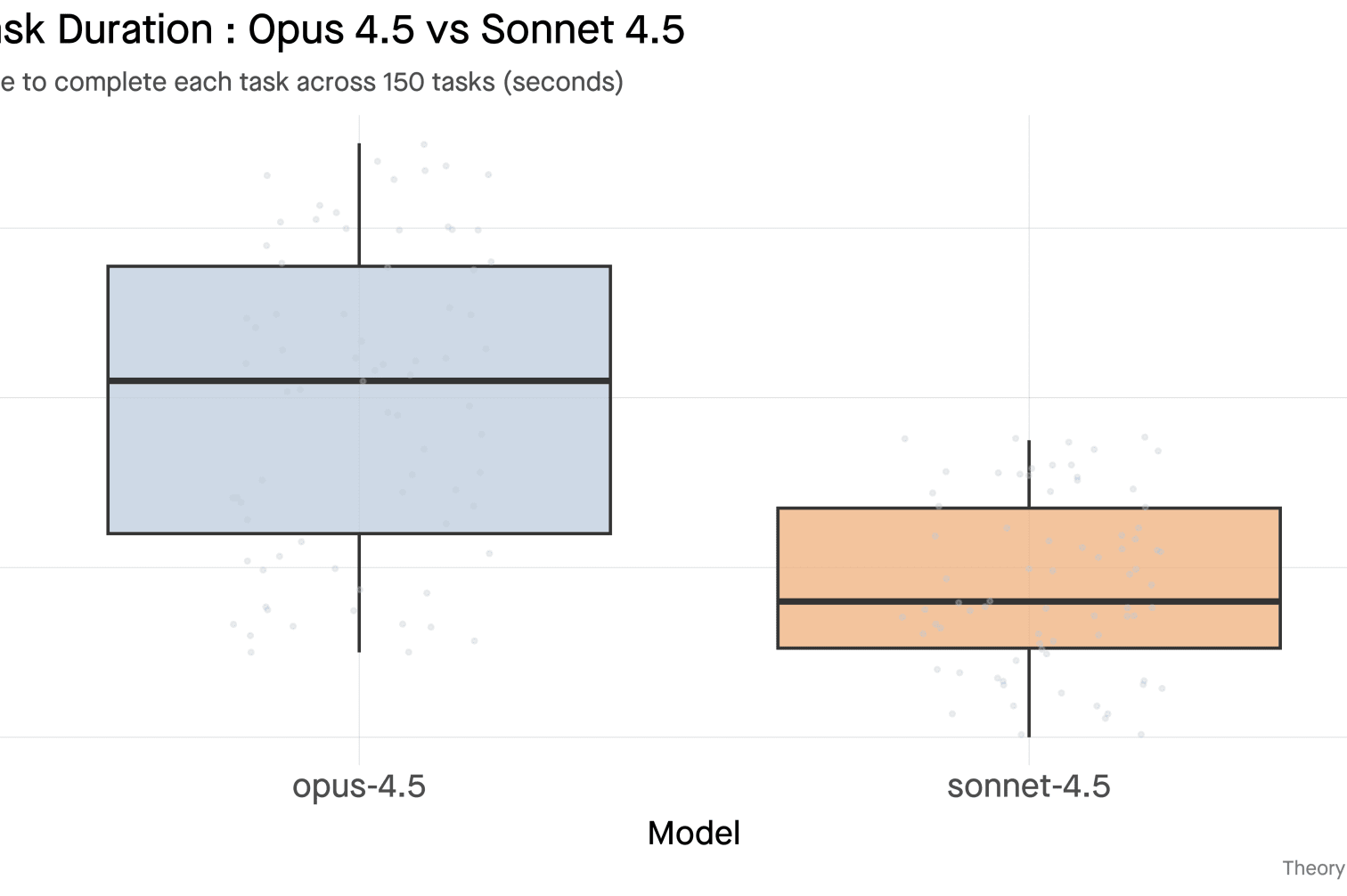

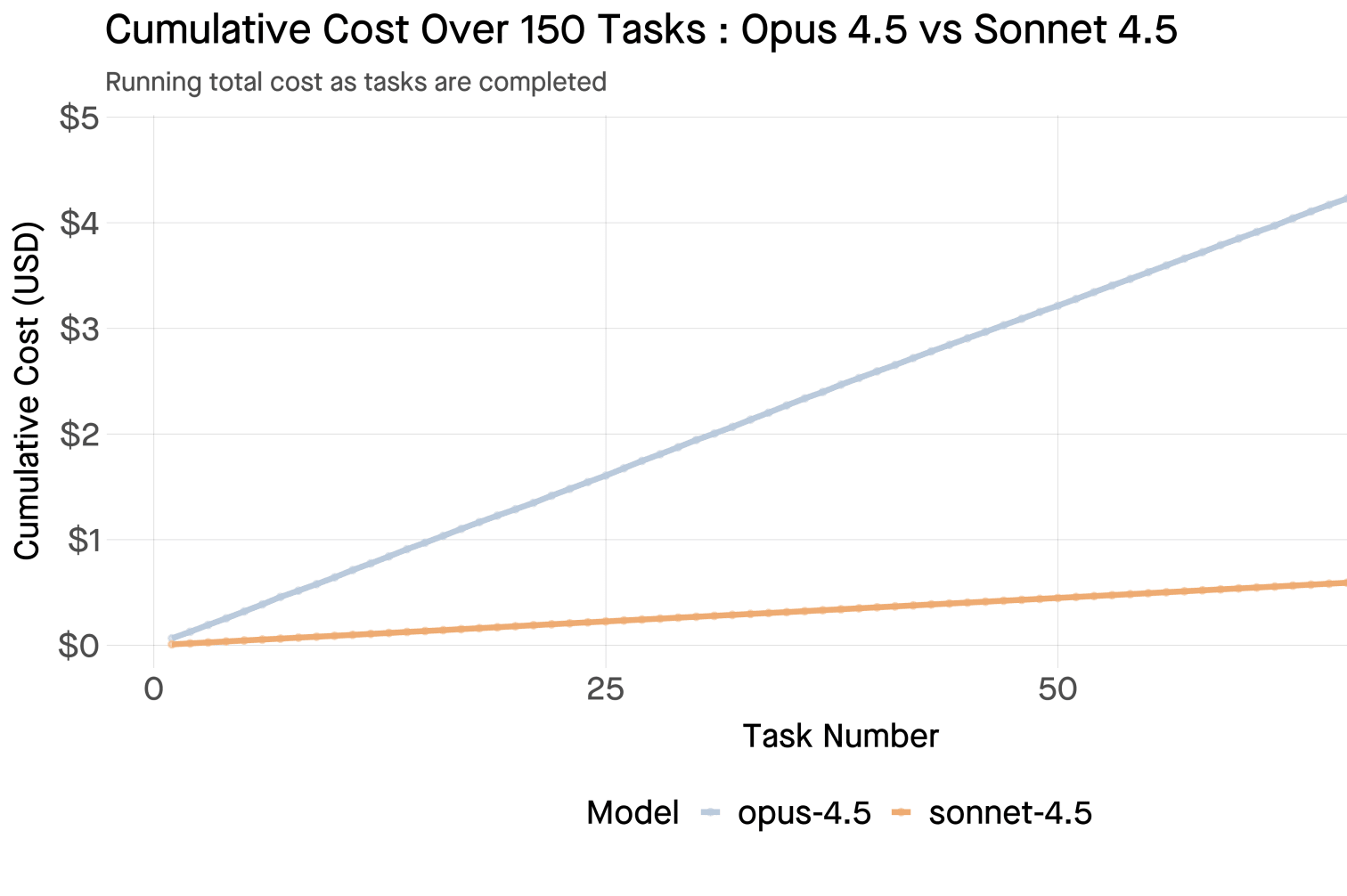

Beyond tracking how often I use AI, I also track which AI models deliver the best value. I analyzed 150 autonomous task executions from my Asana agent monitor, comparing Claude Opus 4.5 with Claude Sonnet 4.5.

The results show that choosing the right model matters enormously : Sonnet 4.5 delivers 86% cost savings while being 36% faster.

Cost Comparison

Opus 4.5 costs $0.064 per task on average, while Sonnet 4.5 costs $0.009 per task. At 50 tasks per day, that’s $1,002 in annual savings.
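The arithmetic behind these figures can be sketched as follows. The per-task costs are the rounded numbers quoted above, & the 365-day year is my assumption; with rounded inputs the annual figure lands a dollar or two away from $1,002.

```python
OPUS_COST = 0.064    # dollars per task, rounded figure from the text
SONNET_COST = 0.009  # dollars per task, rounded figure from the text
TASKS_PER_DAY = 50

def pct_savings(expensive, cheap):
    """Percentage saved by switching from the expensive option to the cheap one."""
    return (expensive - cheap) / expensive * 100

def annual_savings(expensive, cheap, tasks_per_day, days=365):
    """Dollar savings per year, assuming the same task volume every day."""
    return (expensive - cheap) * tasks_per_day * days

print(f"{pct_savings(OPUS_COST, SONNET_COST):.0f}%")                             # 86%
print(f"${annual_savings(OPUS_COST, SONNET_COST, TASKS_PER_DAY):,.2f}")          # $1,003.75
```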

Token Efficiency

Sonnet 4.5 uses 27% fewer tokens (1,797 vs 2,456 per task), contributing to the cost advantage. The model generates more concise outputs while maintaining quality.

Performance

Sonnet 4.5 completes tasks in 38 seconds on average versus 59 seconds for Opus 4.5 - a 36% reduction in completion time. Faster iteration means more productivity.
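Both the token & timing percentages are plain relative reductions. A small sketch, using only the averages quoted above (the `pct_reduction` helper is illustrative), also shows what the time saving means for throughput :

```python
def pct_reduction(baseline, improved):
    """Relative reduction of `improved` versus `baseline`, in percent."""
    return (baseline - improved) / baseline * 100

token_cut = pct_reduction(2456, 1797)  # tokens per task, Opus vs Sonnet
time_cut = pct_reduction(59, 38)       # seconds per task, Opus vs Sonnet
throughput_gain = 59 / 38              # tasks finished in the same wall-clock time

print(f"tokens: {token_cut:.0f}% fewer")        # tokens: 27% fewer
print(f"time: {time_cut:.0f}% less")            # time: 36% less
print(f"throughput: {throughput_gain:.2f}x")    # throughput: 1.55x
```

Put differently, taking 36% less time per task works out to roughly 1.55 times as many tasks in the same period.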

Cumulative Impact

The cost difference adds up quickly. Across the 150 tasks in the sample, the Opus 4.5 runs cost $4.79 in total while the Sonnet 4.5 runs cost just $0.67. Because cost scales with task count, the dollar gap keeps widening as volume grows.

Hybrid Strategy

Not all tasks need Opus 4.5. I use a hybrid approach :

- Sonnet 4.5 (95% of tasks) : Email summaries, task routing, data extraction, routine automation

- Opus 4.5 (5% of tasks) : Complex analysis, investment memos, architectural decisions, ambiguous problems

This strategy delivers 76% cost savings versus using Opus exclusively while maintaining quality where it matters most.
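A hybrid setup like this can be sketched as a simple router plus a blended-cost estimate. Everything below is hypothetical : the task categories are lifted from the two bullets above, the model labels are illustrative rather than real API model IDs, & the costs are the rounded per-task averages from the cost comparison.

```python
# Task types routed to the larger model; anything else goes to the smaller one.
OPUS_TASKS = {
    "complex analysis",
    "investment memo",
    "architectural decision",
    "ambiguous problem",
}

# Rounded per-task costs in dollars. Keys are illustrative labels, not API model IDs.
COST_PER_TASK = {"sonnet-4.5": 0.009, "opus-4.5": 0.064}

def pick_model(task_type: str) -> str:
    """Route a task to a model label based on its (hypothetical) type string."""
    return "opus-4.5" if task_type.lower() in OPUS_TASKS else "sonnet-4.5"

def blended_savings(opus_share: float) -> float:
    """Percent saved versus all-Opus when `opus_share` of tasks go to Opus."""
    blended = (opus_share * COST_PER_TASK["opus-4.5"]
               + (1 - opus_share) * COST_PER_TASK["sonnet-4.5"])
    return (1 - blended / COST_PER_TASK["opus-4.5"]) * 100

print(pick_model("Investment memo"))   # opus-4.5
print(pick_model("email summary"))     # sonnet-4.5
print(f"{blended_savings(0.05):.1f}%")
```

With these rounded costs & an exact 95/5 split, the estimate comes out near 82%, so treat the 76% above as the measured figure rather than something this back-of-the-envelope arithmetic reproduces; the realized savings depend on the actual task mix & per-task costs.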