Since July, have you noticed how much better your AI model has become? Measuring that improvement is hard. All we can do is quantify the vibe : is this one better than that one?

Elo is a score that measures how often one model wins against another, as judged by a human. Which model better answers the prompt : “Describe the differences in texture between a Pink Lady and a Macoun apple”? The one with the higher Elo score.¹
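To make the link between a score and a win rate concrete, here is a minimal sketch of the conventional Elo expected-score formula and its Bradley-Terry equivalent. The 400-point scale and base 10 are the chess convention and are an assumption here; the leaderboard behind these charts may fit its scores differently. The function names are hypothetical.

```python
def elo_win_probability(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Probability that a human judge prefers model A over model B.

    Assumes the conventional Elo logistic curve (base 10, 400-point scale).
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


def elo_to_strength(rating: float, scale: float = 400.0) -> float:
    """Convert an Elo rating into a Bradley-Terry strength parameter."""
    return 10.0 ** (rating / scale)


def bradley_terry_probability(strength_a: float, strength_b: float) -> float:
    """Bradley-Terry: P(A beats B) = s_a / (s_a + s_b)."""
    return strength_a / (strength_a + strength_b)


if __name__ == "__main__":
    # Equal ratings mean a coin flip; a higher rating means a higher win rate.
    print(elo_win_probability(1300, 1300))
    print(elo_win_probability(1400, 1300))

    # The two formulations agree once ratings are mapped to strengths.
    print(bradley_terry_probability(elo_to_strength(1400), elo_to_strength(1300)))
```

Under these assumptions only the difference between two ratings matters, so a gain of a given number of points implies the same change in head-to-head odds anywhere on the scale.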

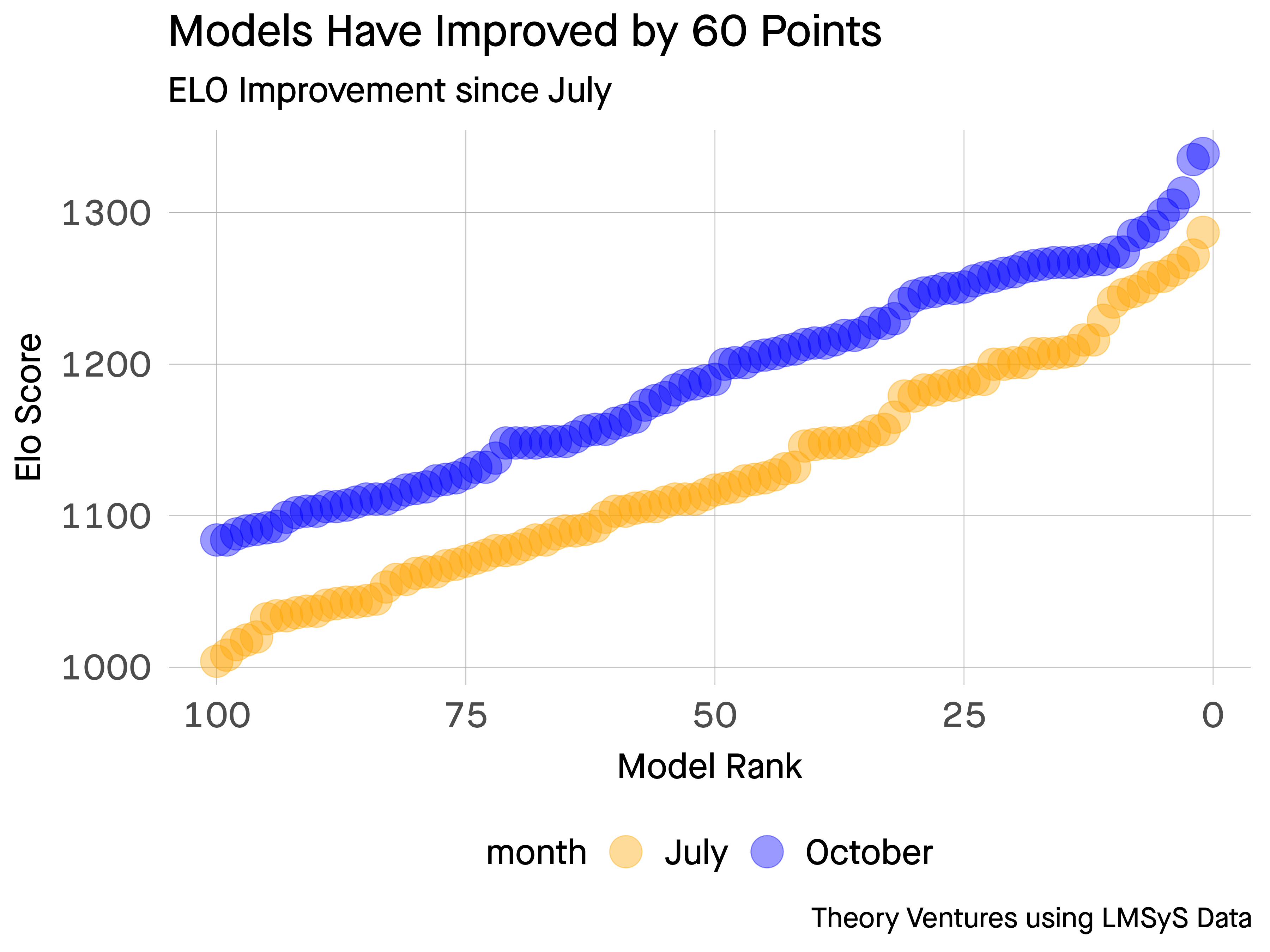

In the last four months, the top 100 models have improved their Elo by about 60 points. The top models now score 1339, up from 1287 in July.
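For a rough sense of scale, here is the gap between the October and July leaders translated into a head-to-head win rate. It assumes the conventional Elo logistic curve with a 400-point scale, which may not match how the underlying leaderboard fits its scores.

$$
P(\text{October leader wins}) = \frac{1}{1 + 10^{-(1339 - 1287)/400}} = \frac{1}{1 + 10^{-0.13}} \approx 0.574
$$

Under that assumption, human judges would prefer the October leader in roughly 57 of 100 matchups against the July leader.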

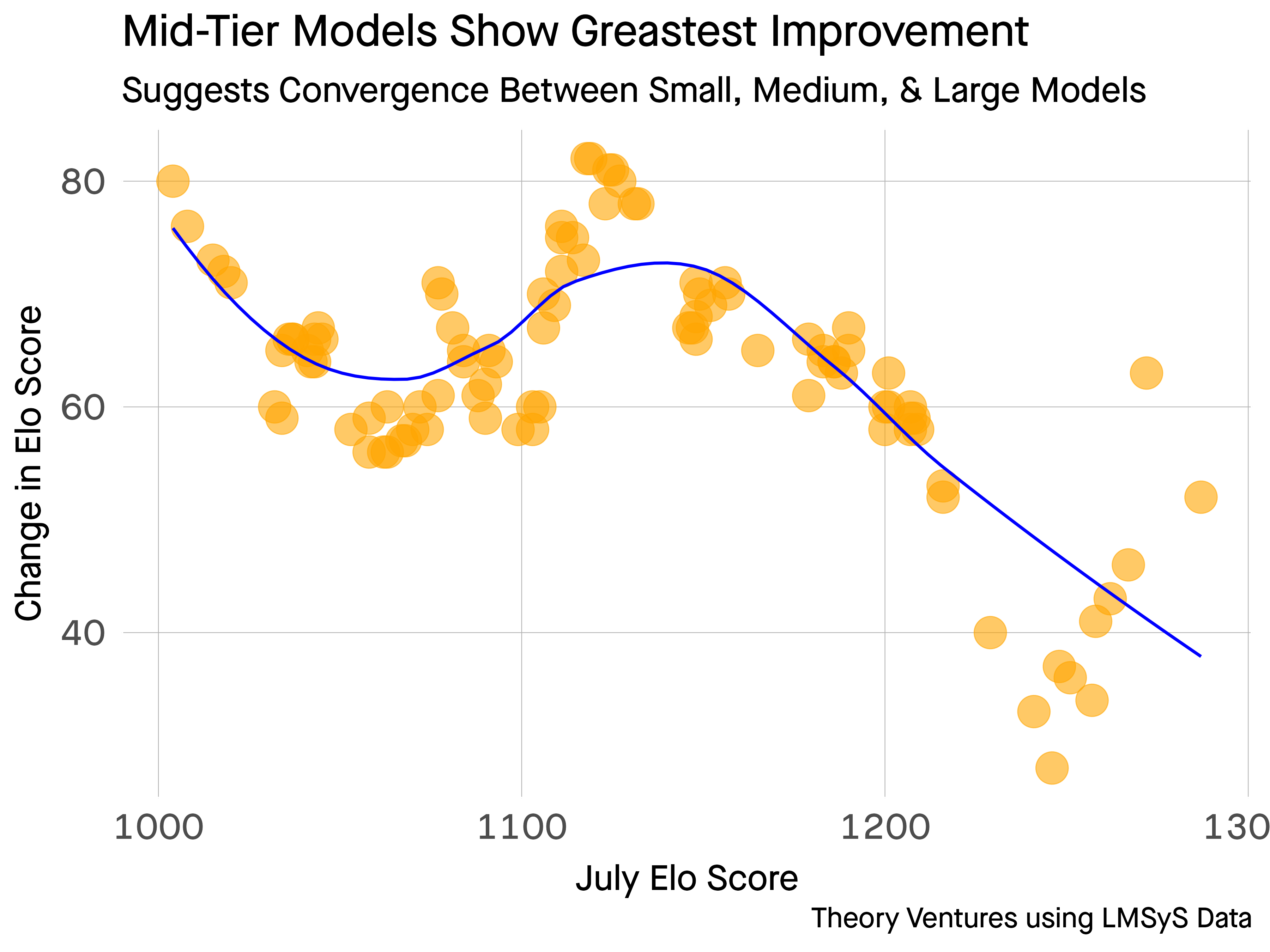

The biggest performance gains occurred in the middle of the distribution. Researchers have driven significantly more performance through algorithmic innovations.

| Model Size | Win Probability Increase | Definition (parameters) |
|---|---|---|
| Small | 32.0% | < 10b |
| Medium | 22.4% | 10b - 100b |
| Large | 29.6% | 100b - 200b |
| Mega | 25.9% | 200b+ |

The smallest models have improved the most : October's small models win nearly a third more often than their July counterparts did four months ago. Every size class has improved its competitive win rate by more than 20%.

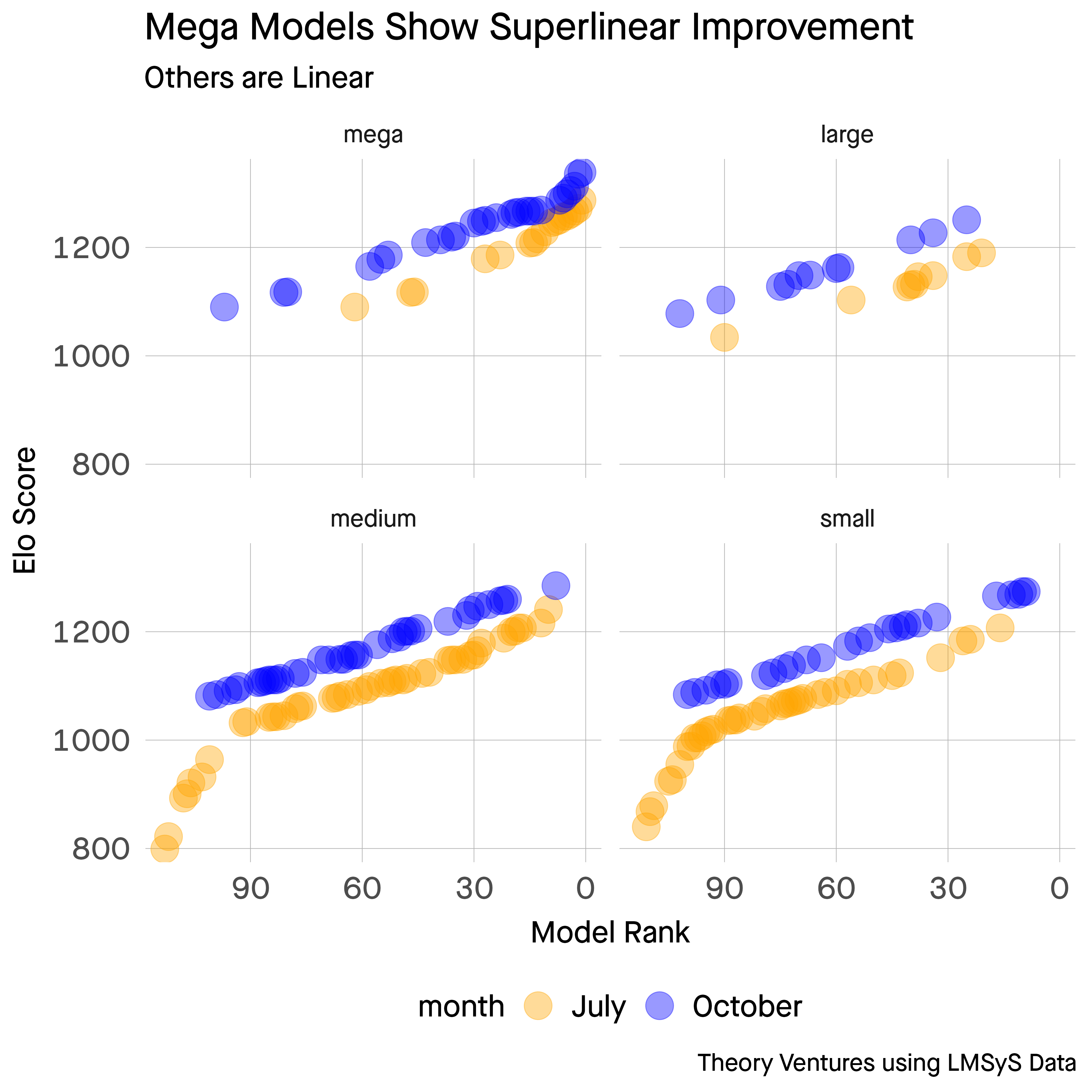

In July, we posed the question : what happens when model performance asymptotes? Progress in small, medium, & large models is linear in Elo terms.

But the mega models show more inflection points, suggesting the recent innovations in reasoning & scale (the biggest models have grown from 200b parameters to more than 400b) have produced the beginning of a new high-growth S-curve.

¹ See the Bradley-Terry model.