As generative AI captivates Startupland, startups will do what they have always done: integrate new technology to build transformative businesses.

Incumbents have seized the moment: Microsoft, Adobe, & others have been among the quickest to integrate generative AI into their products.

In response, startups must develop moats to stake out their market. What are these moats?

At the moment, capital & technical expertise create competitive advantage. Deploying models takes millions of dollars & specialized skills: document chunking, vectorization, prompt-tuning, or plugins for better accuracy & breadth.
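To make two of those terms concrete, here is a minimal sketch of document chunking & vectorization in plain Python. It is an illustration under loud assumptions: the word-count vectors stand in for a real embedding model, and the function names (`chunk_text`, `vectorize`, `top_chunk`) are hypothetical, not any library's API.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=50, overlap=10):
    """Split text into overlapping windows of at most max_words words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def vectorize(text):
    """Toy stand-in for an embedding model (assumption): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_chunk(query, chunks):
    """Return the chunk whose vector is closest to the query's vector."""
    query_vec = vectorize(query)
    return max(chunks, key=lambda chunk: cosine(query_vec, vectorize(chunk)))
```

In a production system the chunk that `top_chunk` returns would typically be placed into the model's prompt. Choosing chunk sizes, overlap, & an embedding model is part of the expertise described above.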

But in the long term, usage will be the enduring moat.

Machine learning systems, like any complex program, benefit from more use. The more queries run against an ML system, the more its strengths & weaknesses come to light. Product & engineering teams apply those insights to improve performance.

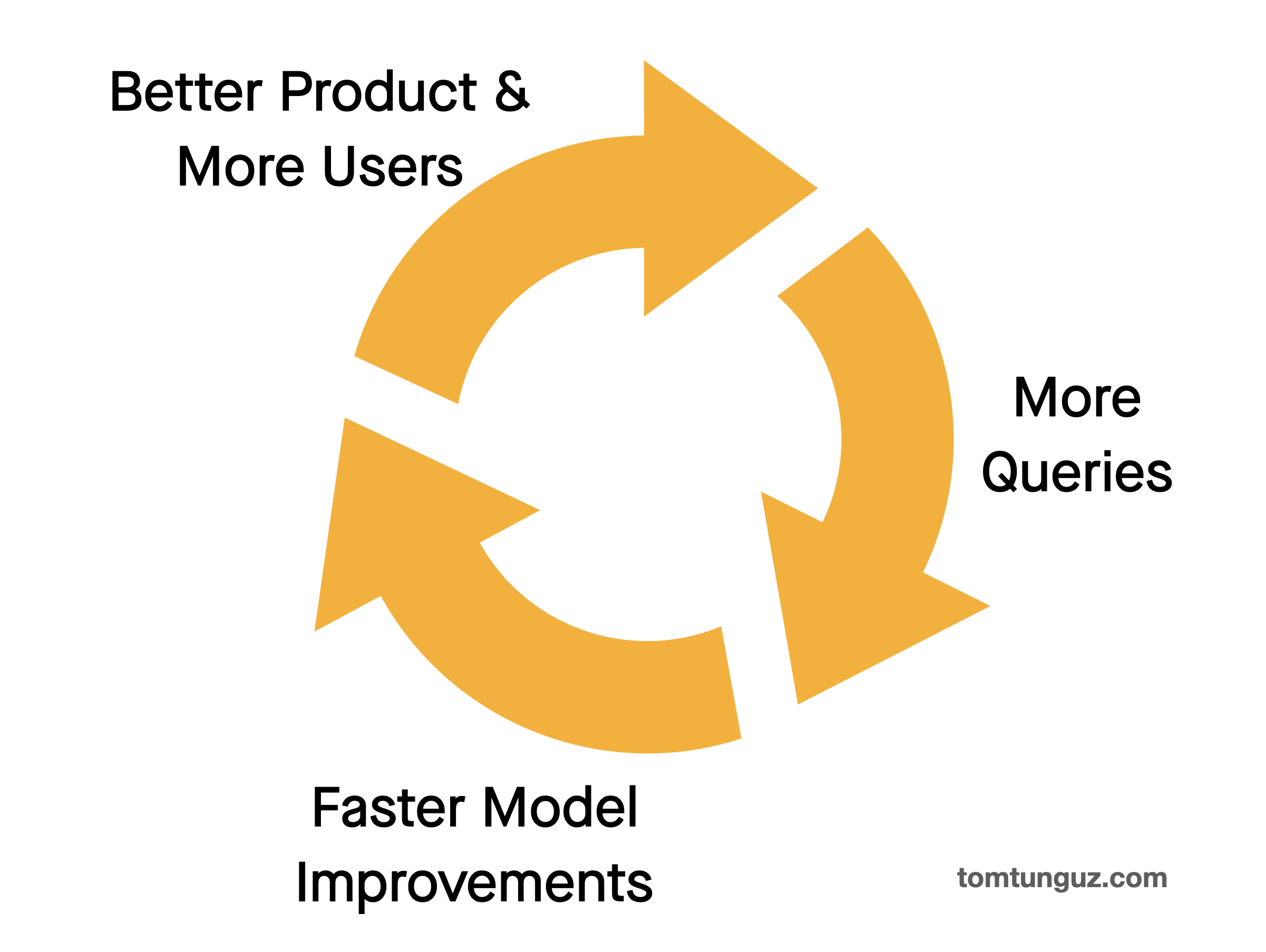

That process spins a flywheel. More queries -> more diagnostic data to improve the model -> a better product with more users.

In addition, researchers have observed an emergent property of machine learning models: something we didn’t anticipate but can see. Researchers at MIT call this phenomenon Reflection.

In this experiment, a machine learning agent learns from its mistakes using another machine learning model called a Reflection LLM.

Reflection improves the agent’s accuracy from 75% to 97% after 12 tries. The technique worked well in one of the two attempts reported, but not both.
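The loop behind that result can be sketched in a few lines. This is a toy illustration under stated assumptions, not the researchers' implementation: the "agent" here guesses a hidden number, the `reflect` function stands in for the Reflection LLM, & every name (`attempt`, `evaluate`, `reflect`, `run_with_reflection`) is hypothetical.

```python
def attempt(task, lessons):
    """Hypothetical agent: guess the midpoint of the range its lessons still allow."""
    low, high = task["low"], task["high"]
    for kind, value in lessons:
        if kind == "too_low":
            low = max(low, value + 1)
        else:  # "too_high"
            high = min(high, value - 1)
    return (low + high) // 2


def evaluate(task, guess):
    """Environment feedback (assumption): did this attempt succeed?"""
    return guess == task["target"]


def reflect(task, guess):
    """Stand-in for the reflection model: turn a failure into a reusable lesson."""
    return ("too_low", guess) if guess < task["target"] else ("too_high", guess)


def run_with_reflection(task, max_tries=12):
    """Retry the task, feeding the lessons from past failures into each new attempt."""
    lessons = []
    for trial in range(1, max_tries + 1):
        guess = attempt(task, lessons)
        if evaluate(task, guess):
            return trial, lessons
        lessons.append(reflect(task, guess))
    return None, lessons  # no success within the budget of tries


trials_used, lessons_learned = run_with_reflection({"low": 1, "high": 100, "target": 37})
```

The point of the sketch is the shape of the loop: each failed try leaves behind a lesson, & the next try starts from a smaller set of mistakes. More tries means more lessons, which is the same dynamic as the usage flywheel.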

It’s still early in this research area, but the paper reinforces the idea that more usage will lead to significantly better model performance.

My marketing professor in grad school wrote an equation on the board the first day of class: Innovation = Innovation + Distribution.

In generative AI, innovation & distribution are inextricably linked, feeding each other. More users means a better product. A better product will attract more users.

That’s the moat.